Today I'm starting a build log for a new server that will be going in our home. I'm calling it HYEPYC (pronounced high-epic); the name is explained below.

Before getting into the specifications and build photos I want to detail what the server will be doing.

1. It will be a Hypervisor, which means it will run lots of virtual machines.

2. It will be a storage server with a lot of storage (125TB+).

3. It will be used for machine learning.

4. It will be used for Home Automation (lights, blinds, heating, people recognition etc).

5. It will run a lot of programs with high CPU usage, network needs and storage requirements.

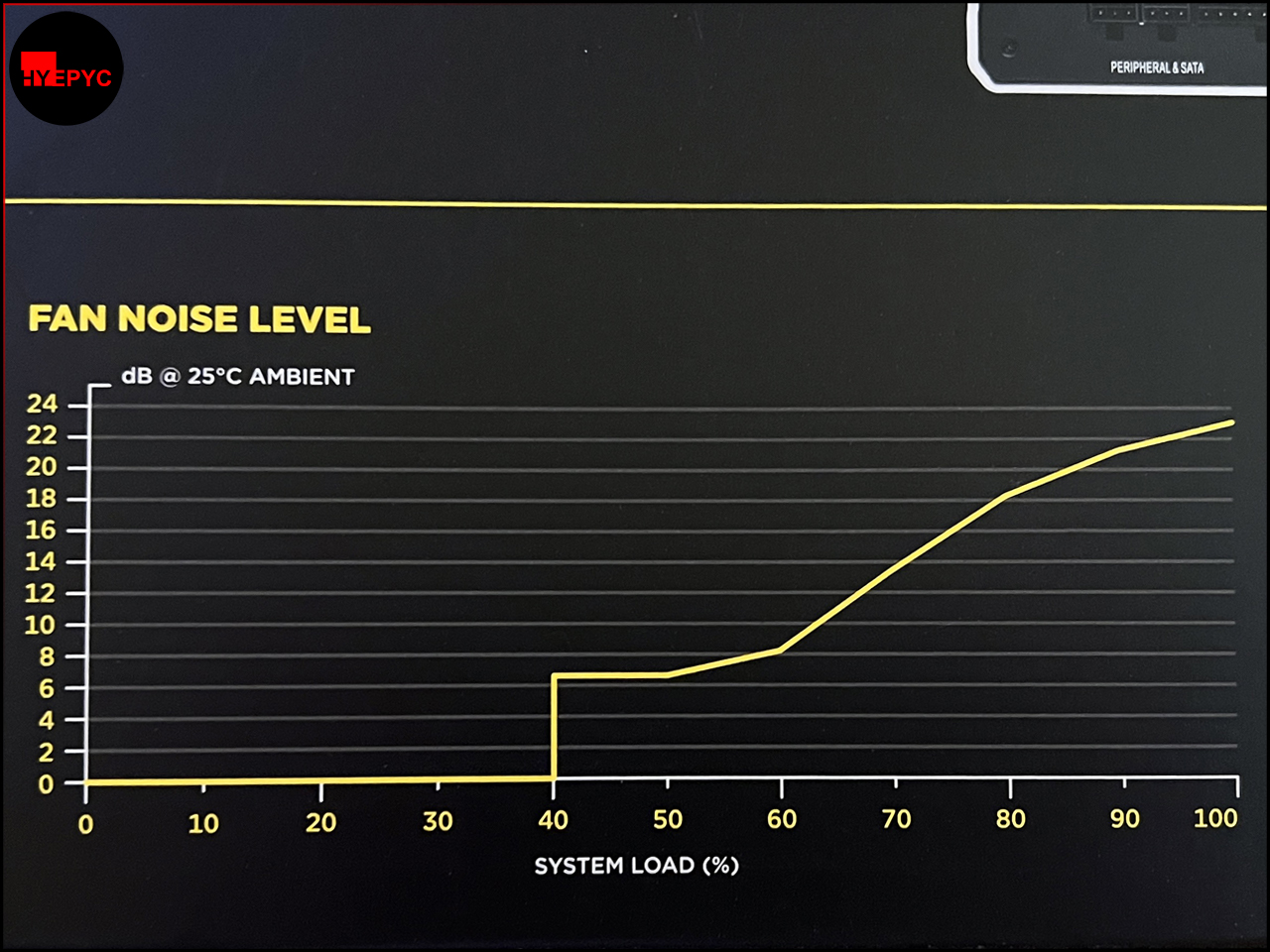

So with all those tasks it needs some beefy components. I'm going to be using a 4U rackmount chassis with 24 drive bays at the front. This gives plenty of room for storage expansion, a server-sized motherboard and large-diameter fans, which allows for higher-performance parts.

Here is the complete spec list:

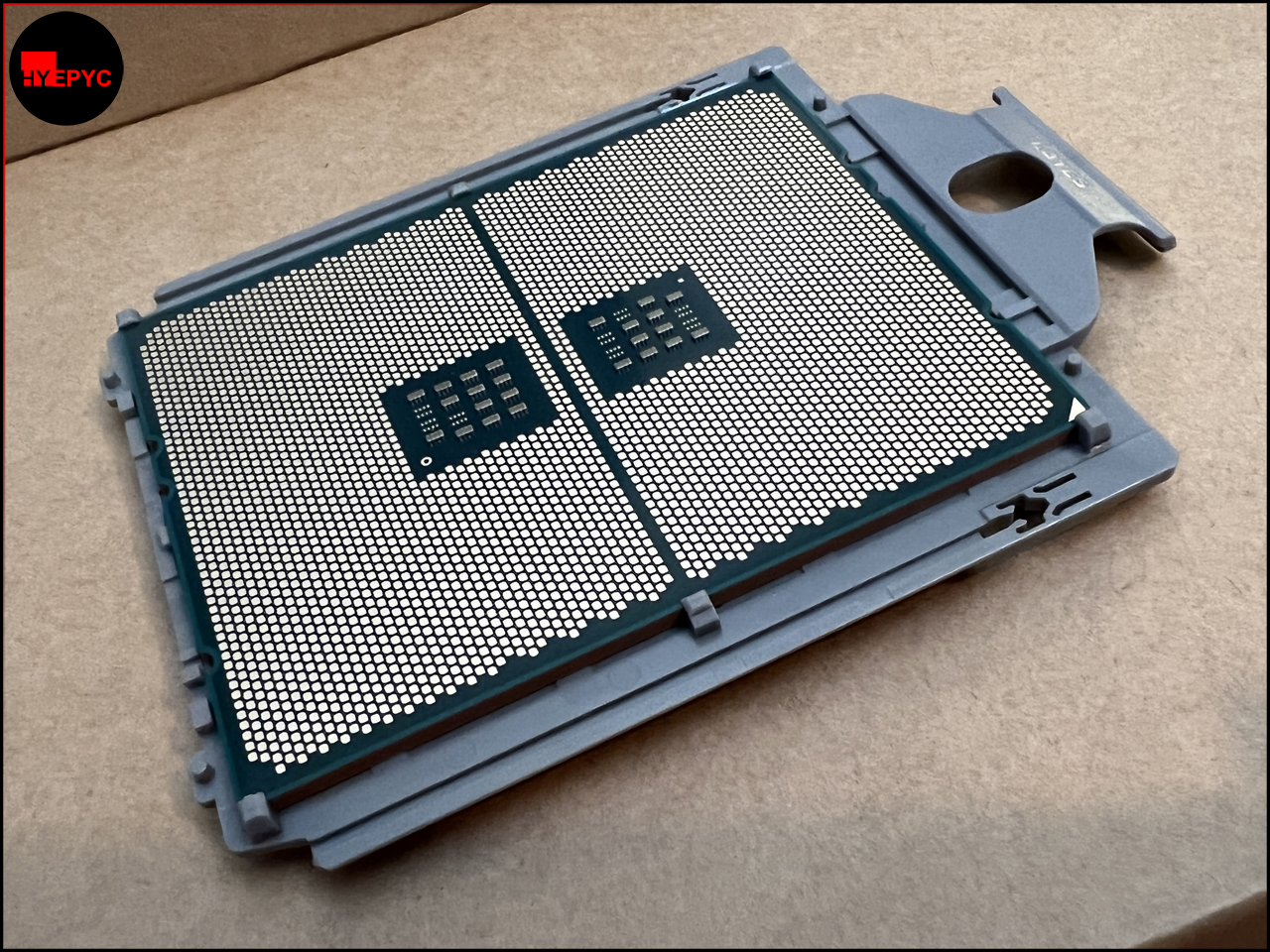

- AMD EPYC Milan 7443P 24 Core / 48 Thread 2.85 GHz to 4 GHz CPU

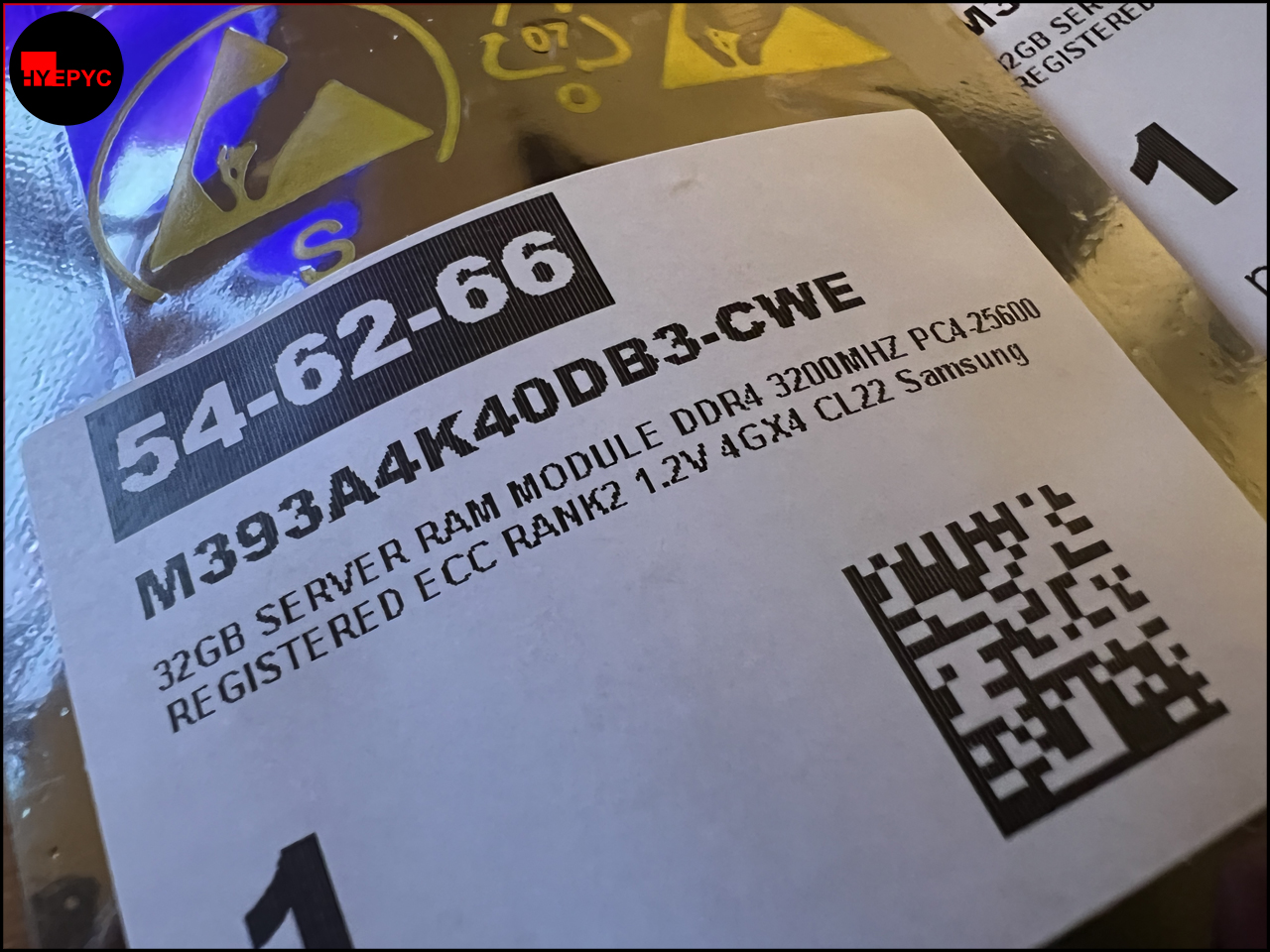

- Samsung 256GB (8x32GB) DDR4 RDIMM ECC 3200MHz CL22 Memory

- ASRock Rack ROMED8-T2 EPYC Motherboard

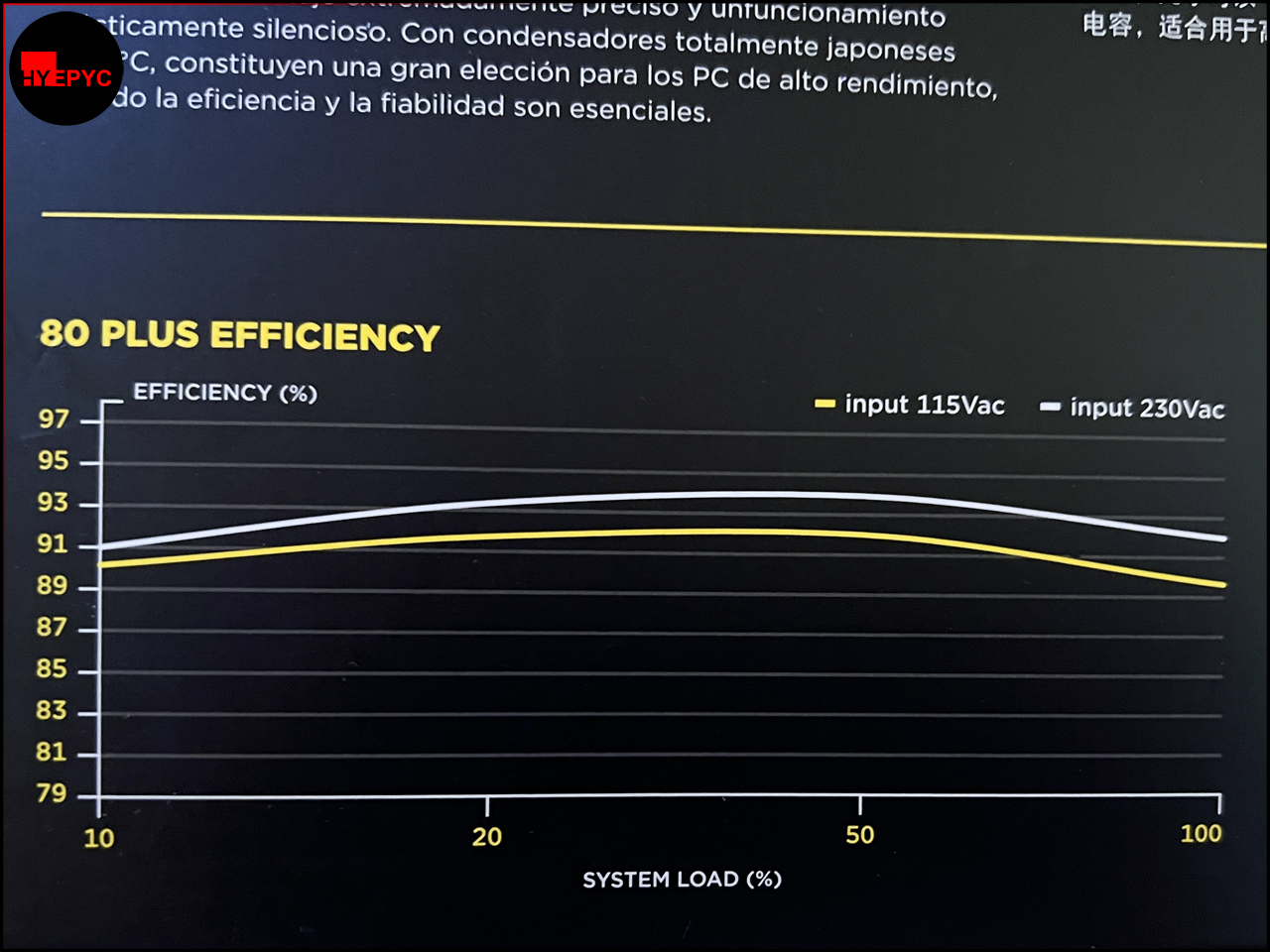

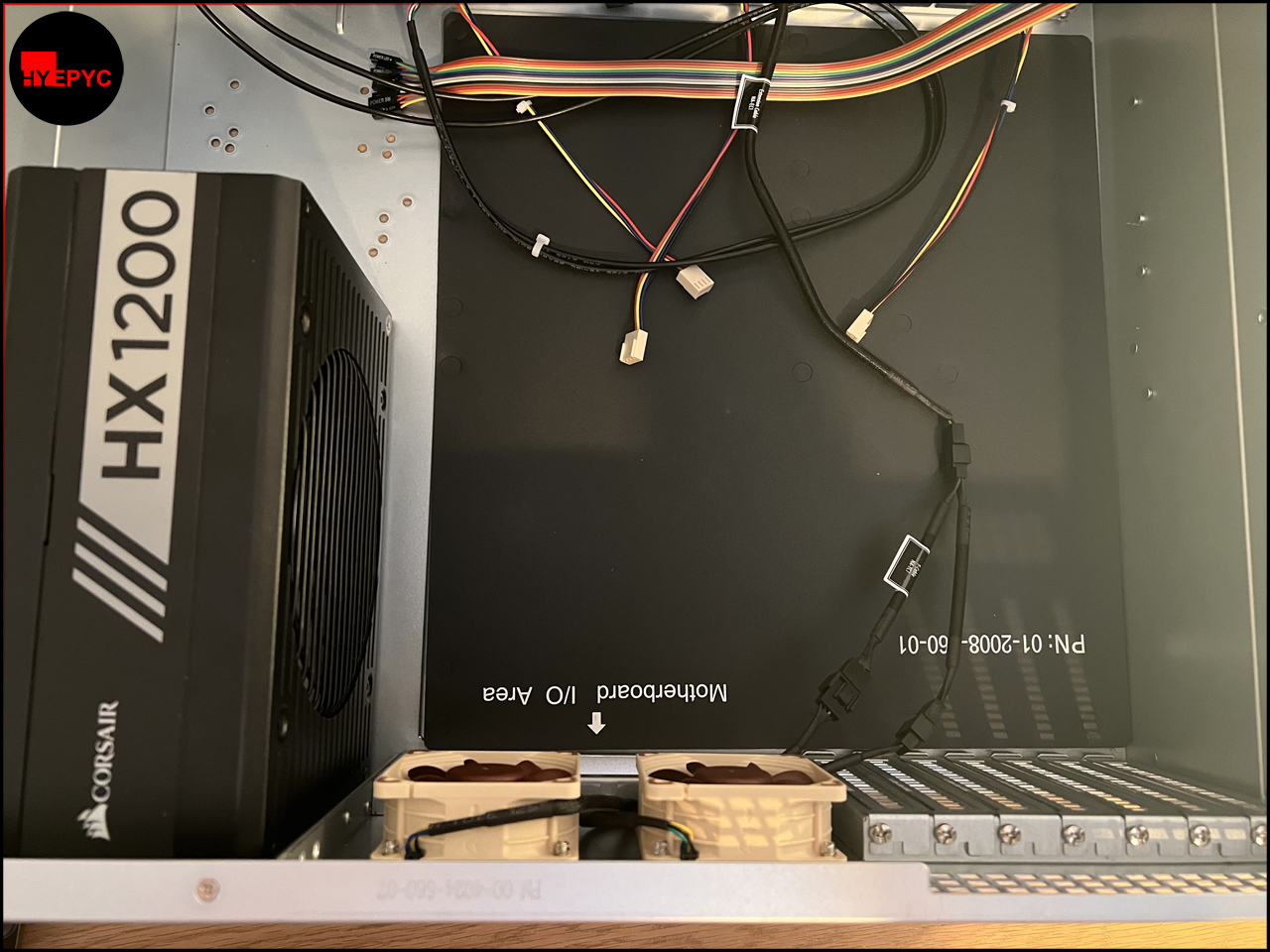

- Corsair HX1200 Platinum 1200 Watt PSU

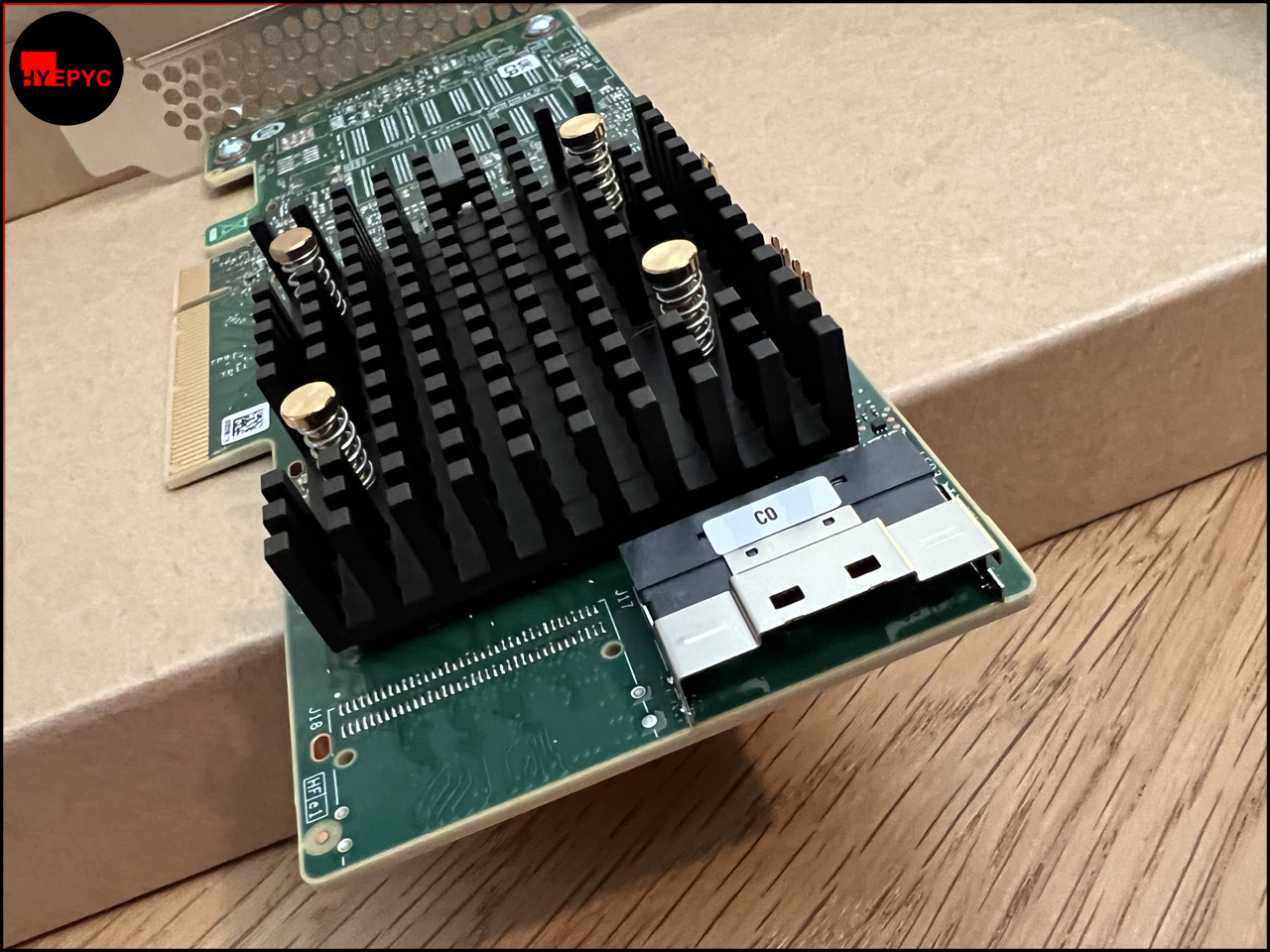

- Broadcom 9500-8i HBA (PCIe 4.0 NVMe/SAS/SATA Disk Controller)

- Samsung PCIe 3.0 2TB 970 Evo Plus NVMe Drive

- Western Digital PCIe 4.0 2TB SN850 NVMe Drive

- Intel PCIe 2.1 X540-T2 2x10Gb/s Network Card

- Toshiba 3x18TB SATA Hard Drives (Enterprise Editions)

- Seagate 7x10TB SATA Hard Drives (NAS Editions)

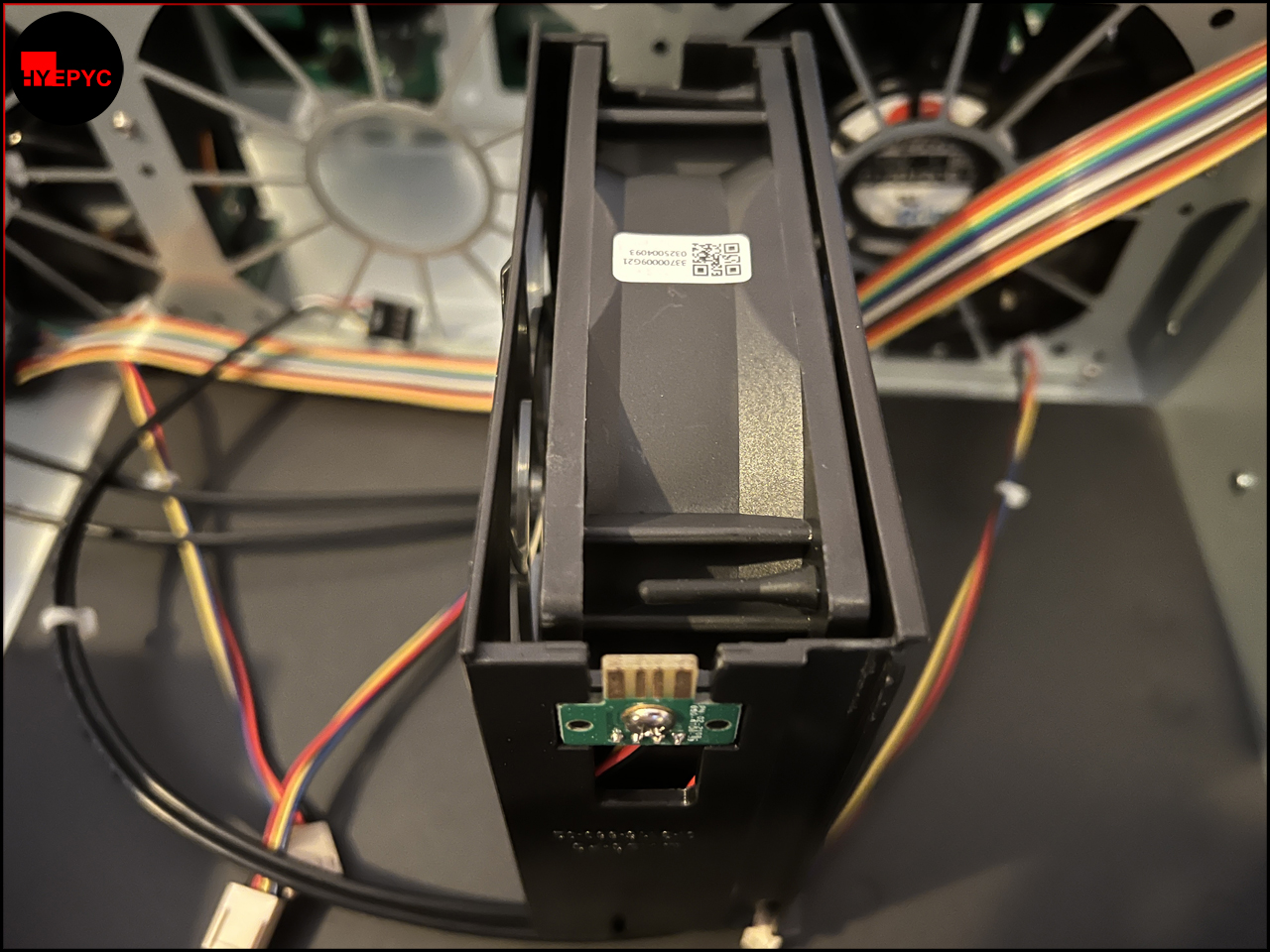

- Gooxi 24-bay Chassis with 12Gb SAS Expander by Areca

Some of the specs above have since changed for a few reasons. Below is what changed and why.

- Motherboard: The ASRock Rack ROMED8-T2 was dropped in favour of the Supermicro H12SSL-NT. This board is higher performing, has more features, fewer bugs and better support, and is actually available to purchase, while only costing a little more.

- NVMe SSDs: The 1 x 2TB SN850 has become 2 x 2TB SN850s. I'm still likely to use the 2TB 970 Evo Plus, but I'm not sure what for. This change happened due to very good Amazon Prime Day deals which essentially halved the cost of the SSDs.

- SATA HDDs: The 3 x 18TB Toshiba drives were switched out for 4 x 18TB WD drives. This was also due to the Prime Day deals. I was able to get 4 x 18TB WDs for a little under the price of 3 x 18TB Toshibas.
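
To put the hard drive swap in numbers, here is a rough sketch of the raw capacity before and after the change. The drive counts and sizes come from the lists above; the function and variable names are just illustrative, and the figures ignore parity and filesystem overhead.

```python
# Drive counts and sizes are taken from the spec list and the changes above.
# Sizes are marketing terabytes; parity and filesystem overhead are
# deliberately ignored, so usable space will be lower than this.

def raw_capacity_tb(drives):
    """Sum raw capacity for a list of (count, size_tb) pairs."""
    return sum(count * size_tb for count, size_tb in drives)

original_hdds = [(3, 18), (7, 10)]  # 3 x 18TB Toshiba + 7 x 10TB Seagate
revised_hdds = [(4, 18), (7, 10)]   # 4 x 18TB WD + 7 x 10TB Seagate

original_tb = raw_capacity_tb(original_hdds)
revised_tb = raw_capacity_tb(revised_hdds)

print(f"Original plan: {original_tb} TB raw")
print(f"Revised plan:  {revised_tb} TB raw (+{revised_tb - original_tb} TB)")
```

These are raw totals for the hard drives only; the NVMe drives would add a few more terabytes on top.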

This build has very few compromises. I've gone with the second-fastest EPYC CPU from AMD when it comes to single-threaded performance (the only one faster has only 8 cores while this one has 24). It's no slouch when it comes to multithreaded loads either, with 24 cores and 48 threads. It will make a great CPU for virtualisation.

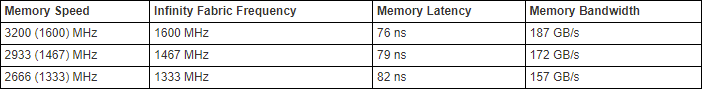

This CPU, like all Milan-era (Zen 3) EPYCs, features 128 PCIe 4.0 lanes and 8 memory channels capable of up to 3200MHz. I've decided to pair it with memory of that speed, the fastest you can get right now that is ECC Registered and JEDEC certified.
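
For a sense of what 8 channels at that speed means, here is a back-of-the-envelope sketch of the theoretical peak memory bandwidth. It assumes the "3200MHz" figure means DDR4-3200 (3200 mega-transfers per second) and a standard 64-bit data path per channel. Real-world throughput will be lower, and the constant names are only illustrative.

```python
# Assumptions (not measurements): DDR4-3200 means 3200 mega-transfers/s,
# and each channel moves 8 bytes (64 data bits) per transfer. ECC bits are
# extra and do not count towards usable bandwidth.
CHANNELS = 8
MEGA_TRANSFERS_PER_SEC = 3200
BYTES_PER_TRANSFER = 8

# 8 x 32GB RDIMMs from the spec list, i.e. one DIMM per channel.
DIMMS = 8
DIMM_SIZE_GB = 32

per_channel_mb_s = MEGA_TRANSFERS_PER_SEC * BYTES_PER_TRANSFER
peak_gb_s = CHANNELS * per_channel_mb_s / 1000
total_memory_gb = DIMMS * DIMM_SIZE_GB

print(f"Per channel: {per_channel_mb_s} MB/s")
print(f"Theoretical peak across {CHANNELS} channels: {peak_gb_s} GB/s")
print(f"Total memory: {total_memory_gb} GB")
```

Filling all 8 channels with one DIMM each is what lets the memory run at the full theoretical width.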

The motherboard I've chosen is unique in that it allows access to almost all of the 128 PCIe 4.0 lanes provided by the CPU. It features 7 full-length x16 PCIe 4.0 slots for a total of 112 accessible lanes, and each slot supports bifurcation to x8x8 and x4x4x4x4, allowing you to theoretically connect 28 separate PCIe devices, each with x4 PCIe 4.0 lanes. This is really great for expanding later with more SSDs on adapter cards, which are quite affordable.
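
The lane arithmetic above can be sketched in a few lines. The slot count, lane count and bifurcation modes are taken from the paragraph above; the names are illustrative, and this is only a sanity check of the numbers, not a model of how any particular board routes its lanes.

```python
# Figures from the paragraph above: 128 CPU lanes, 7 x16 slots, and
# bifurcation of each slot into x8x8 or x4x4x4x4.
CPU_LANES = 128
X16_SLOTS = 7
LANES_PER_SLOT = 16

BIFURCATION_MODES = {
    "x16": [16],
    "x8x8": [8, 8],
    "x4x4x4x4": [4, 4, 4, 4],
}

def devices_per_slot(mode):
    """Number of separate devices one slot can host in a given mode."""
    lanes = BIFURCATION_MODES[mode]
    # Every mode must use exactly the slot's 16 lanes.
    assert sum(lanes) == LANES_PER_SLOT
    return len(lanes)

slot_lanes = X16_SLOTS * LANES_PER_SLOT
max_devices = X16_SLOTS * devices_per_slot("x4x4x4x4")
lanes_not_in_slots = CPU_LANES - slot_lanes

print(f"Lanes exposed through slots: {slot_lanes} of {CPU_LANES}")
print(f"Max x4 devices with every slot in x4x4x4x4: {max_devices}")
print(f"Lanes not exposed through slots: {lanes_not_in_slots}")
```

In practice a single four-way M.2 adapter card in one slot would use the x4x4x4x4 mode to present four NVMe SSDs at x4 each.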

Unfortunately that motherboard is in very high demand, so although I placed my order this week I'm not expecting to receive it before August 1st. It's a similar situation with the chassis: the reseller I'm using was expecting to receive stock from China on June 15th, but as yet there has been no delivery.

As with all the builds I do, I'm often waiting for various parts. Even before the war in Ukraine and the pandemic, things have been difficult to get, and in this situation something as simple as a cable can hold up the whole build.

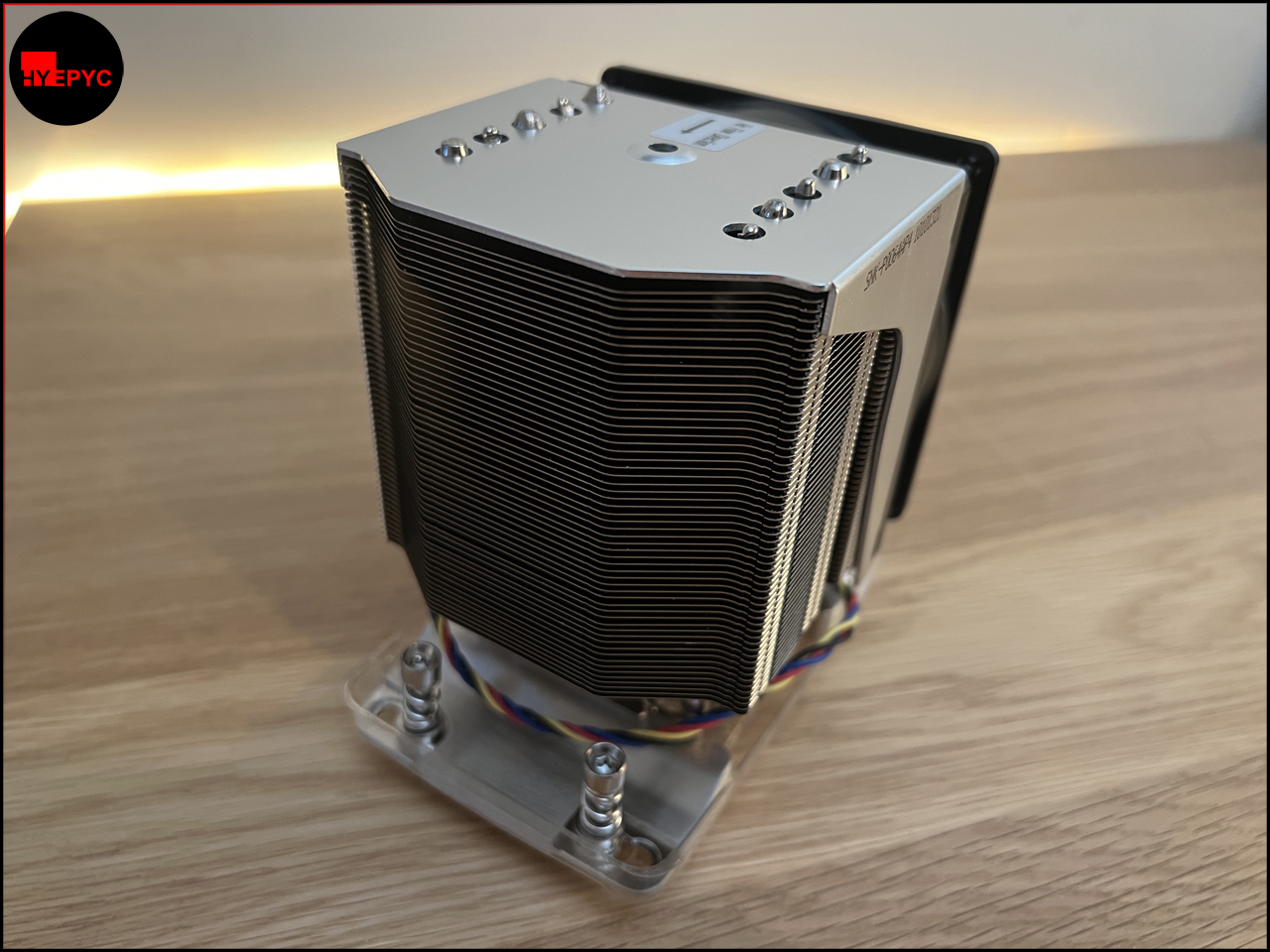

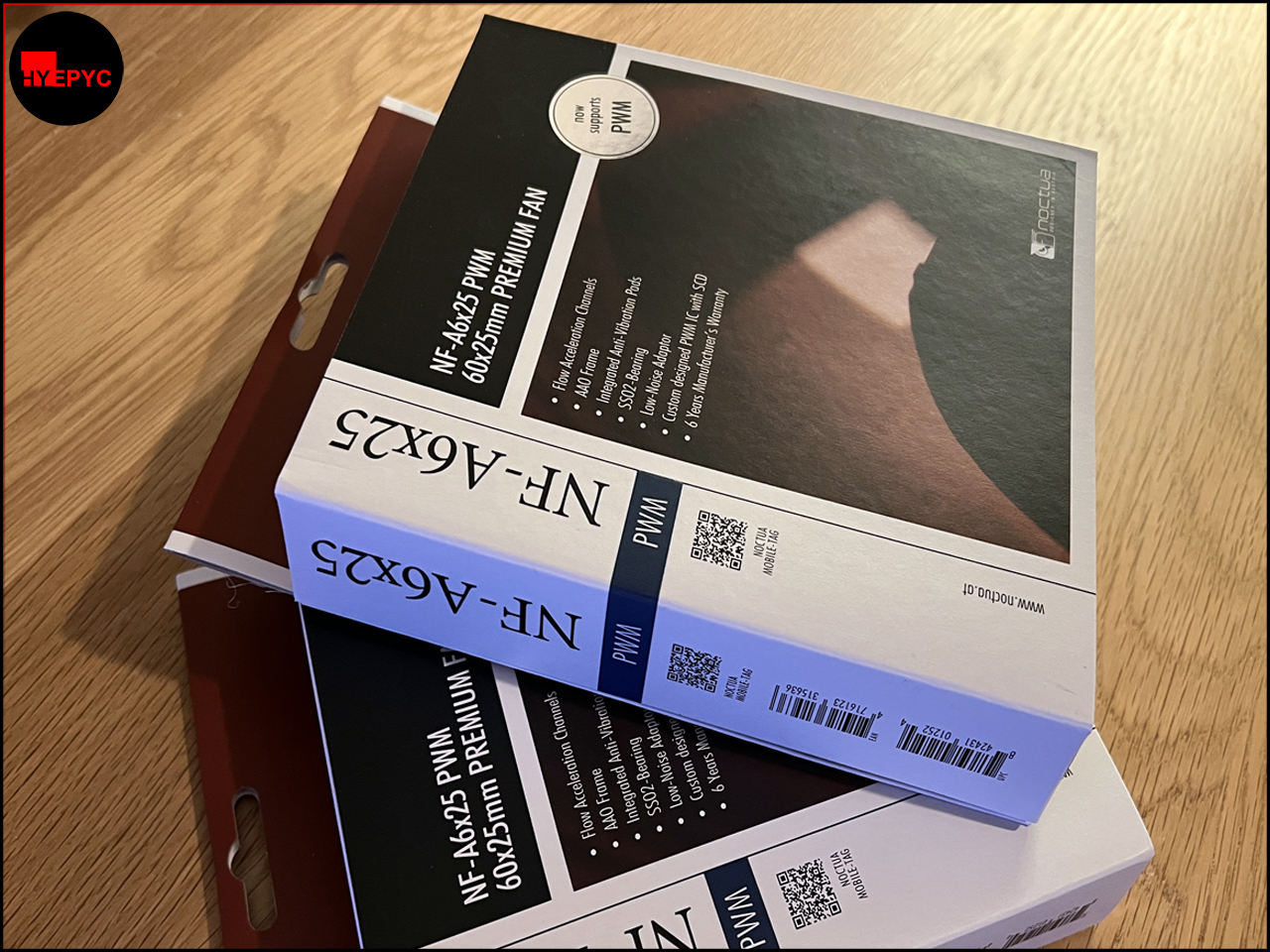

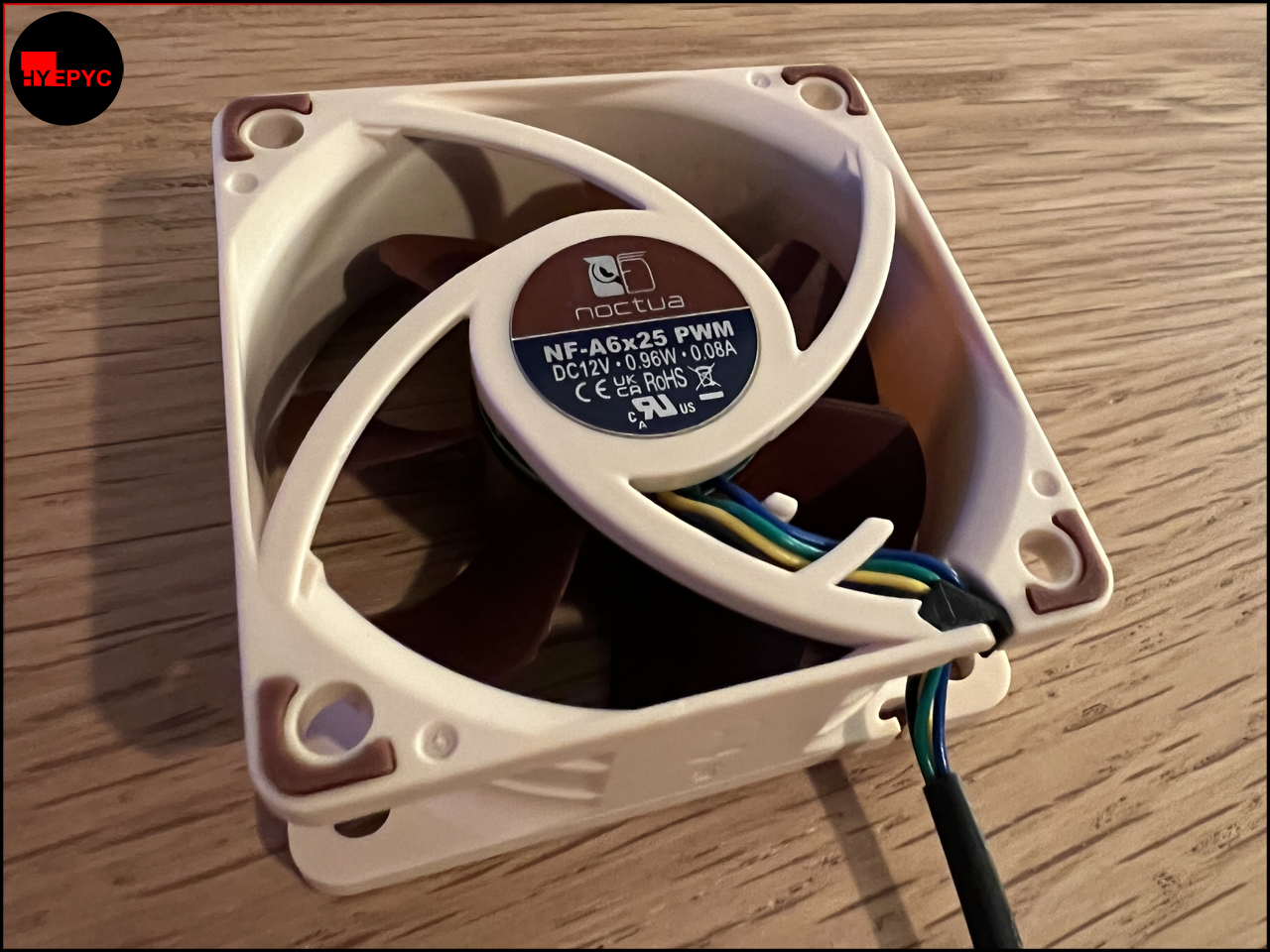

So far I have received the Power Supply, Rack and CPU cooler. Some parts like the 10TB Hard Drives, 970 Evo Plus and Noctua Fans I already have and will be moving from my old server.

You may recall the build log of PROMETHEUS featured here. I built that server in 2014 and have been using it ever since. This new HYEPYC server will replace it after 8 years of 24/7 usage, which is an excellent run in my opinion. The new EPYC chip delivers 5.4x more performance in multithreaded benchmarks than PROMETHEUS did while using less than half the energy.

Looking past the hardware, I will be using Unraid as the operating system for this new server instead of Windows, which I used on PROMETHEUS. The main reason for this is that Unraid is a great Hypervisor (thus the HY in HYEPYC) and it supports a really great unconventional RAID system which allows you to use differently sized disks and add or remove disks over time at your discretion. That's something I've not been able to do with PROMETHEUS due to its hardware RAID card.

I'm quite excited to finish the build, but I'm pretty sure it won't be complete until August, mostly due to the chassis and motherboard. Until the next post I'll leave you with a couple of part images for the case, processor and motherboard.